
Open a role. Post the job. Step away for the night. By morning, hundreds of applications are waiting. By the end of the week, there are thousands. Since 2021, application volume has nearly doubled, even as recruiting teams have gotten smaller.

At first, it looks like demand. More people are looking for work and are interested in your open roles. Great! But once you start digging into those fresh new resumes, you realize not all of that activity is coming from real candidates.

Alongside genuine applicants, there’s been a rise in automated and malicious job applications. Sometimes it’s mass-submission tools flooding applicant tracking systems (ATS); other times it’s bad actors trying to get hired specifically to gain access to internal systems and steal intellectual property. Either way, ATS pipelines fill up fast, and legitimate applications get buried in the noise.

For recruiting teams, the impact is immediate: wasted time, slower hiring cycles, and less trust in pipeline data. For fraud and engineering teams, the challenge runs deeper. Traditional ATS controls weren’t designed to detect automation that spreads across sessions, presenting as a steady stream of “new” applicants.

This article focuses on application fraud: the automated abuse that happens during the application process itself. It does not cover candidate fraud, which typically shows up later during interviews or onboarding.

I’ll break down why job application fraud is increasing, how to recognize when an application was submitted using automation, and the practical steps teams can take to detect and stop it early without turning away real candidates.

Why job application fraud is increasing

Job application fraud isn’t about landing interviews for their own sake. It’s about access and money. Some attackers are after fast payouts, getting hired just long enough to redirect payroll, expense reimbursements, or signing bonuses. Others are playing a longer game, using fake or automated applications to secure roles that grant access to customer data, source code, internal tools, or proprietary processes. That access can be monetized directly through data theft, malware placement, or business email compromise, or reused later through harvested credentials and internal workflows. In rarer cases, the goal is quiet, long-term access, embedding inside companies for surveillance or leverage rather than immediate profit.

What’s changed is how easy it has become to pursue those goals at scale. Job application systems are generally open, automated, and low-risk for attackers, which makes them convenient places to test and repeat abuse. Automated tooling and generative AI can produce convincing resumes, tailor applications, and submit them in bulk. Hiring pipelines are optimized for speed and volume, not adversarial behavior, so bad actors can blend in, test what works, and repeat successful patterns across companies and platforms with little resistance.

The cost goes beyond higher application volume. Recruiting teams spend more time reviewing low-quality submissions, hiring timelines stretch, and pipeline data becomes less reliable. This makes it harder to prioritize real candidates and make confident hiring decisions. The impact is already becoming clear. Gartner projects that by 2028, one in four candidate profiles worldwide will be fake, driven mainly by automation and AI-assisted abuse.

Signs your ATS is seeing automated fraud

Bot-driven application fraud rarely announces itself outright. More often, it shows up as a series of small but persistent patterns that feel “off” long before teams can pinpoint the cause.

These are some of the most common warning signs.

Sign #1: Sudden spikes that don’t match hiring activity

Application volume jumps sharply, with no clear trigger. There’s no new role posted, no campaign launch, and no seasonal hiring push. The spike may hit overnight or arrive in short bursts, then repeat days later. Legitimate interest tends to rise gradually, while automated submissions tend to arrive all at once.
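One way to make this sign measurable is to compare each hour's application count against a trailing baseline. The sketch below is a minimal illustration, not a production detector: the function name, the 24-hour window, the 4x multiplier, and the minimum count are all assumptions you would tune against your own traffic.

```python
from statistics import median

def find_spikes(hourly_counts, window=24, factor=4.0, min_count=20):
    """Return indexes of hours whose volume far exceeds the trailing median.

    All thresholds here are illustrative assumptions, not recommended values.
    """
    spikes = []
    for i in range(window, len(hourly_counts)):
        baseline = median(hourly_counts[i - window:i])
        # Use a floor so a near-zero baseline on a quiet role does not
        # turn every small bump into a "spike".
        threshold = max(min_count, factor * max(baseline, 1))
        if hourly_counts[i] > threshold:
            spikes.append(i)
    return spikes

# Hypothetical data: a quiet role that receives a two-hour overnight burst.
counts = [3, 4, 2, 5, 3, 4] * 4 + [4, 180, 175, 3]
print(find_spikes(counts))  # [25, 26]
```

A median baseline is used here because a single burst barely moves it, so a repeat burst a few hours later is still flagged.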

Sign #2: Applications that move too fast and look too similar

Screening questions are completed in seconds, and resumes across many submissions share the same structure, phrasing, or formatting. Even when candidates appear qualified on paper, the applications feel interchangeable. These patterns often point to automated tools filling out forms at machine speed rather than real candidates applying thoughtfully.
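The "too similar" part of this sign can be approximated with a plain text-similarity check. The following sketch uses Python's standard `difflib` module to find resume pairs that are nearly identical. The 0.9 threshold, the function name, and the sample data are assumptions for illustration, and pairwise comparison like this only suits small batches.

```python
from difflib import SequenceMatcher
from itertools import combinations

def near_duplicates(resumes, threshold=0.9):
    """Return pairs of application IDs whose resume text is nearly identical.

    `resumes` maps an application ID to extracted resume text.
    The threshold is an illustrative assumption.
    """
    pairs = []
    for (id_a, text_a), (id_b, text_b) in combinations(sorted(resumes.items()), 2):
        ratio = SequenceMatcher(None, text_a.lower(), text_b.lower()).ratio()
        if ratio >= threshold:
            pairs.append((id_a, id_b))
    return pairs

# Hypothetical sample: two templated resumes and one unrelated one.
resumes = {
    "a1": "Results-driven engineer with 5 years of experience building scalable backend services in Python.",
    "a2": "Results-driven engineer with 6 years of experience building scalable backend services in Python.",
    "a3": "Former line cook moving into support work; I enjoy solving customer problems.",
}
print(near_duplicates(resumes))  # [('a1', 'a2')]
```

Similar wording alone is not proof of automation, since many real candidates use the same templates. It is more useful as one input alongside timing and device signals.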

Sign #3: Funnel metrics start to break down

As low-quality applications pile up, the effects show up across the funnel. More candidates drop out after initial screens. Interview conversion rates decline. Recruiters spend more time reviewing applications but move fewer candidates forward. Over time, teams lose confidence in what their pipeline data is actually telling them.
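A simple way to watch for this is to track stage-to-stage conversion and compare it with a known-good period. The sketch below assumes you can export per-stage counts from your ATS; the stage names and numbers are made up for illustration.

```python
def conversion_rates(stage_counts):
    """Map each stage transition to the share of candidates that advanced.

    `stage_counts` is an ordered mapping of stage name to candidate count.
    """
    stages = list(stage_counts.items())
    rates = {}
    for (prev_name, prev_n), (name, n) in zip(stages, stages[1:]):
        rates[f"{prev_name}->{name}"] = n / prev_n if prev_n else 0.0
    return rates

# Hypothetical weekly exports: volume grew fivefold, but screens barely moved.
baseline = {"applied": 400, "screened": 100, "interviewed": 40}
this_week = {"applied": 2000, "screened": 110, "interviewed": 42}

for label, counts in (("baseline", baseline), ("this week", this_week)):
    print(label, {k: round(v, 3) for k, v in conversion_rates(counts).items()})
```

A sharp drop in the first transition while later transitions stay roughly flat suggests the extra volume is entering at the top of the funnel and adding little real candidate supply.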

Why traditional ATS controls fail against application bots

Most ATS platforms include protections designed to prevent abuse. Tools like CAPTCHAs, rate limits, and basic form validations can stop simple spam. Identity verification (IDV) also plays an important role by confirming that a real person exists and is present at a specific moment. The limitation is that these controls evaluate individual interactions in isolation, without strong signals about the browser or device behind them.

Application bots take advantage of that gap. They spread activity across sessions, rotate environments, and return repeatedly over time, with each submission appearing independent and legitimate. A CAPTCHA might be solved once. A rate limit might be avoided by slowing down submissions. An IDV check might never trigger if it sits later in the funnel. Without a reliable way to understand whether multiple applications are coming from the same automated source, these submissions blend into normal traffic.

As a result, automated submissions can move through early stages undetected, piling up before teams realize what’s happening. By the time the problem becomes visible, recruiters are already sorting through low-quality applications, and fraud teams are left reacting instead of preventing. To stop bot-driven job application fraud earlier, teams need accurate signals that help distinguish real candidates from automation before the funnel breaks down.

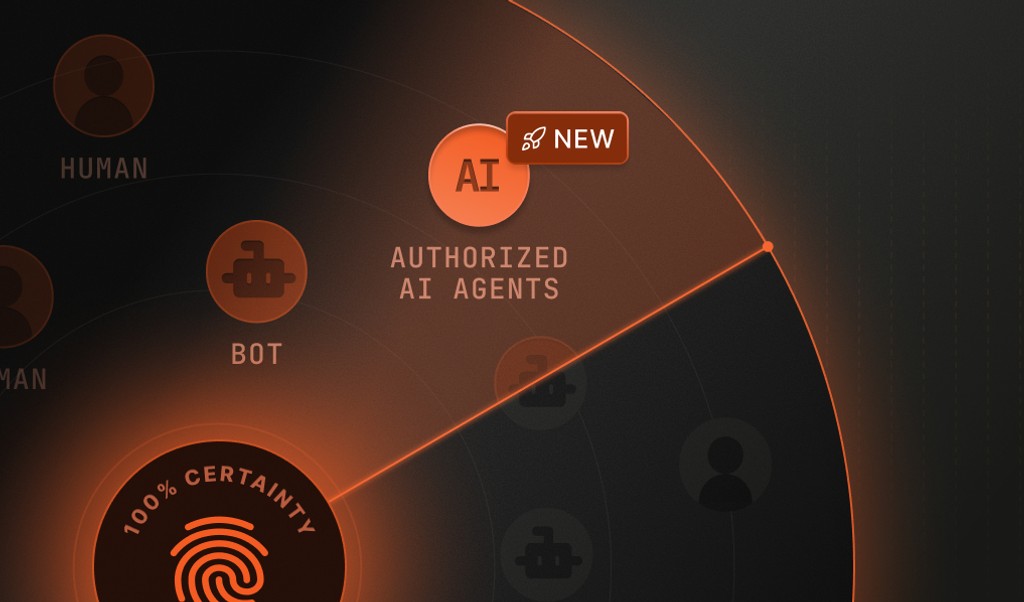

How Fingerprint ensures accurate detection

Traditional ATS platform controls often evaluate individual moments in isolation.

Fingerprint adds context by analyzing signals from the browser, device, and network as applications are submitted. Through Smart Signals such as bot detection, proxy detection, and browser tampering detection, teams can identify suspicious activity in real time, even when automated tools rotate IP addresses, slow down submissions, or pass basic challenges.

Additionally, Fingerprint uses device intelligence to identify returning visitors and assign a stable visitor ID to each browser or device, making it possible to link otherwise separate applications back to the same browser or device. That visibility helps teams spot repeated or automated submissions early, before application volume spikes and pipeline quality declines.
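To illustrate why a stable identifier matters, the sketch below groups stored application records by visitor ID. It assumes your backend saves the visitor ID captured at submission next to each application. The record shape and field names (`application_id`, `visitor_id`, `ip`, `email`) are hypothetical and are not Fingerprint's API response format.

```python
from collections import defaultdict

def linked_applications(applications):
    """Return visitor IDs that submitted more than one application."""
    grouped = defaultdict(list)
    for app in applications:
        grouped[app["visitor_id"]].append(app["application_id"])
    return {vid: ids for vid, ids in grouped.items() if len(ids) > 1}

# Hypothetical records: three "different" applicants with rotating IPs and
# emails that all come from the same browser, plus one unrelated applicant.
applications = [
    {"application_id": "app-1", "visitor_id": "v-abc", "ip": "203.0.113.10", "email": "ana@example.com"},
    {"application_id": "app-2", "visitor_id": "v-abc", "ip": "198.51.100.7", "email": "ben@example.com"},
    {"application_id": "app-3", "visitor_id": "v-xyz", "ip": "192.0.2.44", "email": "cam@example.com"},
    {"application_id": "app-4", "visitor_id": "v-abc", "ip": "203.0.113.99", "email": "dee@example.com"},
]
print(linked_applications(applications))
```

Grouping by IP or email would treat all four records as unrelated. Grouping by the device-level identifier surfaces the three that share a source.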

Fingerprint works alongside existing ATS platform controls. It doesn’t replace tools like identity verification; it strengthens them. Teams can use the bot detection signal and visitor ID at the point of submission to block or flag automated applications, reduce noise, and apply friction only when risk is high, while keeping the experience smooth for legitimate candidates.
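A submission-time policy along these lines might look like the sketch below. It assumes your backend has already fetched the relevant signals and reduced them to simple booleans and a count. The `SubmissionSignals` class, its field names, the action labels, and the threshold are all hypothetical, and the ordering of the rules is only one possible design.

```python
from dataclasses import dataclass

@dataclass
class SubmissionSignals:
    # Hypothetical, pre-processed inputs; not a real API response shape.
    bot_detected: bool
    tampering_detected: bool
    proxy_detected: bool
    recent_applications_from_visitor: int

def decide(signals, max_recent=3):
    """Return 'block', 'review', 'challenge', or 'allow' for one submission."""
    if signals.bot_detected:
        return "block"
    if signals.tampering_detected or signals.recent_applications_from_visitor > max_recent:
        return "review"      # flag for a human or a later identity check
    if signals.proxy_detected:
        return "challenge"   # add friction, e.g. an extra verification step
    return "allow"           # no added friction for low-risk sessions

print(decide(SubmissionSignals(False, False, False, 1)))  # allow
print(decide(SubmissionSignals(False, False, True, 1)))   # challenge
print(decide(SubmissionSignals(False, False, False, 8)))  # review
print(decide(SubmissionSignals(True, False, False, 1)))   # block
```

The point of the tiered result is that most candidates fall through to `allow` and never see extra steps, while friction is reserved for sessions with concrete risk signals.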

Steps to detect bot-driven application spam

Stopping bot-driven application fraud starts with recognizing how automation shows up during the application process. The following steps outline a practical approach teams can take to detect automated activity early, before it overwhelms the hiring pipeline.

Step 1: Look for repeated behavior across application attempts

Even without deep historical analysis, repeated behavior can surface quickly. When multiple applications follow identical paths, complete forms with the same timing, or originate from the same device, it’s often a sign of spamming rather than genuine candidate interest.

Spotting these similarities helps teams move beyond surface-level signals and identify abuse that would otherwise appear legitimate at a glance.
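As a rough sketch of this step, each application attempt can be reduced to a behavior signature (the pages visited plus a coarse timing bucket) and then counted. The field names `pages` and `seconds_to_submit`, the 5-second bucket, and the repeat threshold are assumptions for illustration.

```python
from collections import Counter

def behavior_signature(attempt, bucket_seconds=5):
    """Reduce an attempt to (navigation path, coarse completion-time bucket)."""
    bucket = int(attempt["seconds_to_submit"] // bucket_seconds)
    return (tuple(attempt["pages"]), bucket)

def repeated_signatures(attempts, min_repeats=3):
    """Return signatures that occur at least `min_repeats` times."""
    counts = Counter(behavior_signature(a) for a in attempts)
    return {sig: n for sig, n in counts.items() if n >= min_repeats}

# Hypothetical attempts: three near-identical runs and one human-looking visit.
attempts = [
    {"pages": ["job", "form", "submit"], "seconds_to_submit": 11.0},
    {"pages": ["job", "form", "submit"], "seconds_to_submit": 12.0},
    {"pages": ["job", "form", "submit"], "seconds_to_submit": 13.0},
    {"pages": ["job", "about", "form", "submit"], "seconds_to_submit": 240.0},
]
print(repeated_signatures(attempts))
```

Bucketing the timing keeps small jitter from hiding a repeated pattern. A fixed bucket can still split values that straddle a boundary, so treat the counts as a hint, not a verdict.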

Step 2: Look for signs of automation during the application flow

Many bots reveal themselves in how they interact with the application. For instance, forms may be completed far faster than a human reasonably could, screening questions may be answered instantly, and navigation may follow a perfectly consistent pattern from start to submit.

These behaviors can be evaluated in real time, without waiting for patterns to emerge over days or weeks. By watching for signals like unnatural speed, highly uniform interactions, or browser manipulation, teams can detect automated activity as it happens and intervene before low-quality submissions pile up.
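The checks described here can run on a single session as soon as the form is submitted. The sketch below assumes the front end records a timestamp (in seconds) each time a field is completed and that a tampering flag is available from your device-intelligence provider. The function name, flag labels, and both thresholds are illustrative assumptions.

```python
from statistics import pstdev

def automation_flags(field_timestamps, tampering_detected=False,
                     min_total_seconds=20.0, min_interval_stdev=0.15):
    """Return a list of automation indicators for one application session."""
    flags = []
    intervals = [b - a for a, b in zip(field_timestamps, field_timestamps[1:])]
    if len(field_timestamps) >= 2:
        total = field_timestamps[-1] - field_timestamps[0]
        if total < min_total_seconds:
            flags.append("too_fast")
    # Humans pause unevenly between fields; near-zero spread suggests a script.
    if len(intervals) >= 3 and pstdev(intervals) < min_interval_stdev:
        flags.append("uniform_timing")
    if tampering_detected:
        flags.append("browser_tampering")
    return flags

# Hypothetical sessions.
scripted = [0.0, 0.5, 1.0, 1.5, 2.0]
human = [0.0, 12.4, 31.0, 58.7, 95.2]
print(automation_flags(scripted))  # ['too_fast', 'uniform_timing']
print(automation_flags(human))     # []
```

Returning named flags instead of a single score makes it easier to explain why a session was slowed down, which helps when a real candidate is flagged by mistake.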

Stop application bots before they overwhelm your pipeline

Bot-driven job application fraud doesn’t have to reach a breaking point before teams respond. With the right signals in place, it’s possible to detect automated activity early, reduce job application spam, and protect the experience for real candidates.

If you’re seeing unexplained spikes, broken funnel metrics, or repeated application patterns, now is a good time to take a closer look. Start a free trial to see how device intelligence can help you identify suspicious behavior earlier in the application journey, or contact our sales team to talk through how your application flow works today and where automation could be entering the pipeline.


FAQ

Blocking application bots works best when teams apply friction selectively rather than universally. By evaluating device context, teams can identify activity that looks automated and apply additional checks only when the risk is high. Legitimate candidates move through the application process without disruption, while suspicious sessions are slowed down or stopped early.

No ATS platform is immune. Bot-driven application fraud typically targets high-visibility platforms with open application flows or multiple integrations. Risk depends less on the ATS vendor and more on how application activity is evaluated. Platforms that rely only on basic or outdated controls are more likely to miss automated submissions.