What Is Content Scraping?

Content or web scraping extracts valuable data from websites using automated scripts or bots. If your website contains expensive data to collect or compute (e.g., flight connections, real-estate listings, product prices, or user data), a bad actor or competitor could steal and use it for nefarious purposes. Your data could also be scraped by browsing plugins for generative AI models like ChatGPT and used to answer user queries or train large language models without your knowledge.

Bots vary in their ability to scrape content and avoid detection. Simple scripts using a command-line tool like wget or an HTTP library can retrieve pages from a web server and parse information from the HTML response. They can be effective for scraping static sites but are less effective for dynamic client-rendered content. They are also easier to detect, as your website can easily test whether the client is able to execute JavaScript.

Headless browsers and browser automation tools like Puppeteer or Selenium are much more sophisticated. They can execute JavaScript, scroll, press buttons, wait for client-rendered content to load, and scrape it. They are full-featured browsers, only automated, which makes them more robust and harder to detect. Many also have “stealth” plugins, which try to make them resemble regular browsers.
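To make the idea of detectable automation concrete, here is a minimal sketch of two naive client-side checks: the standard `navigator.webdriver` flag and a `HeadlessChrome` token in the user agent. The function name `naiveAutomationSignals` is hypothetical, and this is an illustration only, not how any particular product works. Stealth plugins can hide both signals, which is why such simple checks are not enough on their own.

```javascript
// Returns a list of naive automation hints found on a navigator-like object.
// In a browser you would pass `window.navigator`; a plain object works for testing.
function naiveAutomationSignals(nav) {
  const signals = [];

  // The WebDriver specification sets navigator.webdriver to true
  // when the browser is controlled by automation.
  if (nav.webdriver === true) {
    signals.push("webdriver");
  }

  // Headless Chrome advertises itself in the default user agent string.
  if (/HeadlessChrome/.test(nav.userAgent ?? "")) {
    signals.push("headlessUserAgent");
  }

  return signals;
}
```

An empty array means neither hint was found, which does not prove the visitor is human.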

Please review our article on understanding content scraping to learn more about how it works and its impact on website owners.

Protect Your Content from Sophisticated Bots

A web application firewall (WAF) can provide an essential layer of rule-based protection, such as blocking IP ranges, countries, and data centers known to host bots. This first line of defense is helpful but sometimes insufficient, as scrapers can use proxies to cycle through different IP addresses.
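As a rough illustration of rule-based IP blocking, the sketch below checks an IPv4 address against a list of CIDR ranges. The function names and the blocklist are hypothetical (the ranges are reserved documentation addresses), and in practice a WAF would normally perform this matching for you.

```javascript
// Convert a dotted IPv4 string such as "203.0.113.42" to an unsigned 32-bit integer.
function ipv4ToInt(ip) {
  const parts = ip.split(".");
  const valid =
    parts.length === 4 &&
    parts.every((p) => /^\d{1,3}$/.test(p) && Number(p) <= 255);
  if (!valid) {
    throw new Error(`Invalid IPv4 address: ${ip}`);
  }
  return parts.reduce((acc, p) => ((acc << 8) | Number(p)) >>> 0, 0);
}

// Check whether `ip` falls inside a CIDR range such as "203.0.113.0/24".
function isInCidr(ip, cidr) {
  const [range, bitsText] = cidr.split("/");
  const bits = Number(bitsText);
  // Shifting by 32 is a no-op in JavaScript, so handle /0 explicitly.
  const mask = bits === 0 ? 0 : (0xffffffff << (32 - bits)) >>> 0;
  return ((ipv4ToInt(ip) & mask) >>> 0) === ((ipv4ToInt(range) & mask) >>> 0);
}

// Hypothetical blocklist; these ranges are reserved for documentation.
const BLOCKED_RANGES = ["203.0.113.0/24", "198.51.100.0/24"];

function isBlockedIp(ip) {
  return BLOCKED_RANGES.some((cidr) => isInCidr(ip, cidr));
}
```

A scraper rotating through proxies outside the listed ranges would pass this check, which is the limitation described above.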

You can ask your visitors to prove they’re human by completing CAPTCHA challenges, like picking all the images that contain a sombrero. This is generally effective but also disruptive to the user experience. To fight bots without bothering humans, you can use a client-side library to detect bots at runtime by analyzing the visitor’s browser.

Fingerprint Pro Bot Detection collects vast amounts of browser data that bots leak (errors, network overrides, browser attribute inconsistencies, API changes, and more) to reliably distinguish real users from headless browsers, automation tools, their derivatives, and plugins.

It is based on BotD, a free and open-source library we created that runs entirely in the client and detects simple bots. Fingerprint Pro Bot Detection, however, can detect a broader range of sophisticated bots and runs the analysis on the server side, where it’s not vulnerable to tampering by bots themselves. See our documentation for a detailed comparison of BotD and Fingerprint Pro Bot Detection. The example below uses the non-open-source version.

Integrate Fingerprint Bot Detection into Your Website

First, sign up for a Fingerprint account. Bot detection is included in the Pro Plus plan as one of the Smart signals alongside incognito mode detection, VPN detection, browser tampering detection, and other data points useful for securing your website.

Add the JavaScript agent to your website client. Once enabled, you can use the same JavaScript agent for visitor identification and Bot Detection. We have client libraries for all significant front-end frameworks, or you can load the script from our CDN as shown below:

    // Initialize the agent.
    const fpPromise = import("https://metrics.yourdomain.com/web/v3/<your-public-api-key>").then(
      (FingerprintJS) =>
        FingerprintJS.load({
          endpoint: "https://metrics.yourdomain.com",
        })
    );

Note: We recommend routing requests to Fingerprint CDN and API through your domain for production deployments as demonstrated above. This prevents disruption from ad blockers and improves accuracy. You can learn multiple ways to do this in our guide on protecting your JavaScript agent from ad blockers.

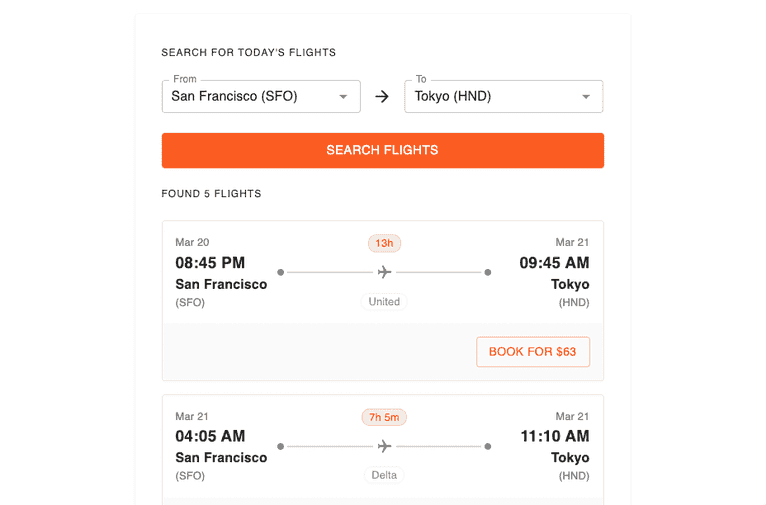

Let’s use an airline website as an example. The visitor picks their destination and clicks “Search flights.” Before returning the results from the server, you want to ensure they are not a bot.

On the client, right before requesting the flight data, use the loaded fpPromise to send browser parameters to Fingerprint Pro API for analysis. You will get a requestId in the response. Include it in the search request you send to your server.

    async function onClickSearchFlights(from, to) {
      // Collect browser signals for bot detection and send them
      // to the Fingerprint Pro API. The response contains a `requestId`.
      const { requestId } = await (await fpPromise).get();

      // Pass the `requestId` to your server alongside the flights query
      const response = await fetch(`/api/flights`, {
        method: "POST",
        headers: {
          "Content-Type": "application/json",
        },
        body: JSON.stringify({ from, to, requestId }),
      });
    }

Note: Fingerprint Pro must collect signals from the browser to detect bots. Therefore, it is best used to protect data endpoints accessible from your website, as this article demonstrates. It is not designed to protect server-rendered or static content sent to the browser on the initial page load, as browser signals are unavailable during server-side rendering.

On the server, send the requestId to the Fingerprint Pro Server API to get your bot detection result. If the requestId is malformed or not found, your endpoint should not return the flight results. You can call the Server API REST endpoint directly or use one of our Server SDKs. Here is an example using the Node.js SDK:

    import {
      FingerprintJsServerApiClient,
      Region,
    } from "@fingerprintjs/fingerprintjs-pro-server-api";

    export default async function getFlightsEndpoint(req, res) {
      const { from, to, requestId } = req.body;
      let botDetection;

      try {
        // Initialize the Server API client.
        const client = new FingerprintJsServerApiClient({
          region: Region.Global,
          apiKey: "<YOUR_SERVER_API_KEY>",
        });

        // Get the analysis event from the Server API using the `requestId`.
        const eventResponse = await client.getEvent(requestId);
        botDetection = eventResponse.products?.botd?.data;
      } catch (error) {
        // If getting the event fails, it's likely that the
        // `requestId` was spoofed, so don't return the flight results.
        res.status(500).json({
          message: "RequestId not found, potential spoofing detected.",
        });
        return;
      }

      // Continue processing the botDetection result...
    }

The botDetection result returned from the Server API tells you if Fingerprint Pro detected a good bot (for example, a search engine crawler), a bad bot (an automated browser), or not a bot at all.

    {
      "bot": {
        "result": "bad", // or "good" or "notDetected"
        "type": "headlessChrome"
      },
      "userAgent": "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) HeadlessChrome/110.0.5481.177 Safari/537.36",
      "url": "https://yourdomain.com/search",
      "ip": "61.127.217.15",
      "time": "2023-09-08T16:43:23.241Z",
      "requestId": "1234557403227.AbclEC"
    }

If the visitor is a malicious bot, return an error. Optionally, you could update your WAF rules to block the bot’s IP address in the future. See our Bot Firewall tutorial for more details.

    // Determine if a bad bot was detected.
    if (botDetection?.bot?.result === "bad") {
      res.status(403).json({
        message: "Malicious bot detected, scraping flight data is not allowed.",
      });
      // Optionally: saveAndBlockBotIp(botDetection.ip);
      return;
    }

At this point, you know that the fingerprinting request is real and that no malicious bot was detected. But you still need to verify that the result actually belongs to this search request. The bot could have replaced the real requestId with an old one obtained manually some time ago. To protect against replay attacks, you need to verify the freshness of the fingerprinting request:

    // Fingerprinting event must be a maximum of 3 seconds old.
    if (Date.now() - Number(new Date(botDetection.time)) > 3000) {
      res.status(403).json({
        message: "Old visit detected, potential replay attack.",
      });
      return;
    }

You also want to verify that the origin of the fingerprinting request matches the origin of the search request itself. Usually, both will be coming from your website’s domain.

    // `URL.origin` includes the scheme (for example, "https://yourdomain.com"),
    // as does the `Origin` request header, so compare against the full origin.
    const fpRequestOrigin = new URL(botDetection.url).origin;
    if (
      fpRequestOrigin !== req.headers["origin"] ||
      fpRequestOrigin !== "https://yourdomain.com" ||
      req.headers["origin"] !== "https://yourdomain.com"
    ) {
      res.status(403).json({
        message: "Origin mismatch detected, potential spoofing attack.",
      });
      return;
    }

Finally, verify that the fingerprinting request's IP matches the search request's IP.

    if (botDetection.ip !== req.headers["x-forwarded-for"]?.split(",")[0]) {
      res.status(403).json({
        message: "IP mismatch detected, potential spoofing attack.",
      });
      return;
    }

Having verified the authenticity of the bot detection result, you can now confidently return the flights:

    const flights = await getFlightResults(from, to);
    res.status(200).json({ flights });

Explore Our Web Scraping Prevention Demo

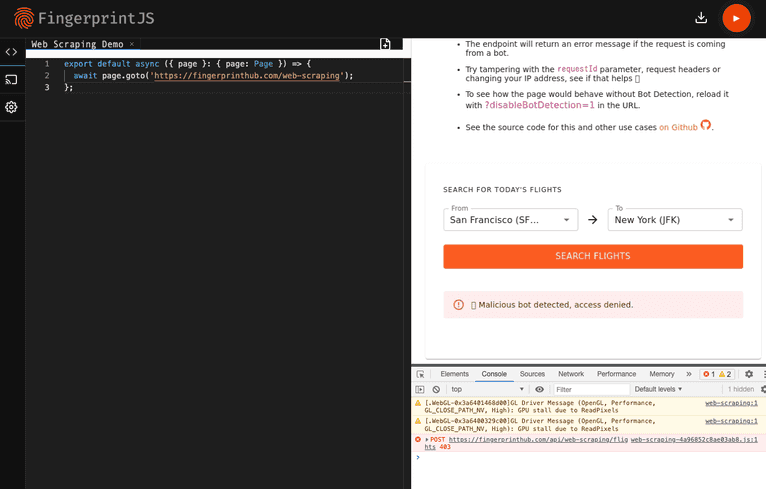

Visit the Web Scraping Prevention Demo we built to demonstrate the concepts above. You can explore the open-source code on GitHub or run it in your browser with CodeSandbox. The core of the use case is implemented in this component and this endpoint.

To see Fingerprint Pro Bot Detection in action, you must visit the use case website as a bot. The easiest way is to use a Browserless debugger, which allows you to control an automated browser in the cloud from your browser.

- Go to the public Browserless instance.

- Copy this snippet into the code editor:

      export default async ({ page }: { page: Page }) => {
        await page.goto("https://demo.fingerprint.com/web-scraping");
      };

- Press the “Play” button in the top right to run the bot (and wait a few seconds).

- In the developer console, in the bottom right, you can see that a request made to https://demo.fingerprint.com/api/web-scraping/flights failed with a 403 Forbidden error. Clicking on this will take you to the Network tab, where you can see the full response:

      {
        "message": "🤖 Malicious bot detected, access denied.",
        "severity": "error",
        "type": "MaliciousBotDetected"
      }

The automated browser can’t access the flight search results when Bot Detection is enabled.

If you prefer to explore and test locally, the demo contains end-to-end tests. Execute them to see that you can scrape the flight results with Bot Detection disabled, but not otherwise.

    git clone https://github.com/fingerprintjs/fingerprintjs-pro-use-cases
    cd fingerprintjs-pro-use-cases
    yarn install
    yarn dev

    # In a second terminal window
    yarn test:e2e:chrome e2e/scraping/protected.spec.js --debug
    yarn test:e2e:chrome e2e/scraping/unprotected.spec.js --debug

If you have any questions, please reach out to our support team. You can view this and other live technical demos and their source code on GitHub.

FAQ

Common signs of content scraping include unusual spikes in traffic, frequent requests from the same IP address, and access patterns that look like methodical data collection rather than normal user behavior.
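One way to approximate "methodical" access is to look at how regular the gaps between a client's requests are. The sketch below is a simple heuristic under that assumption, not a definitive detector: the function name `looksMethodical` and its thresholds are hypothetical, and real traffic analysis would combine many more signals.

```javascript
// Flags a series of request timestamps (in milliseconds) whose spacing is
// suspiciously regular. Uses the coefficient of variation of the gaps:
// standard deviation divided by mean. Humans tend to produce irregular gaps.
function looksMethodical(timestamps, { minRequests = 5, maxVariation = 0.1 } = {}) {
  if (timestamps.length < minRequests) {
    return false; // Not enough data to judge.
  }

  const sorted = [...timestamps].sort((a, b) => a - b);
  const gaps = sorted.slice(1).map((t, i) => t - sorted[i]);
  const mean = gaps.reduce((sum, g) => sum + g, 0) / gaps.length;

  if (mean === 0) {
    return true; // All requests arrived at the same instant.
  }

  const variance = gaps.reduce((sum, g) => sum + (g - mean) ** 2, 0) / gaps.length;
  return Math.sqrt(variance) / mean <= maxVariation;
}
```

A scraper that adds random delays would evade this check, so treat it as one weak signal among several.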

To make scraping harder, use rate limiting, block IP addresses from known bot sources, and analyze traffic to distinguish between bots and humans.
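As an illustration of rate limiting, here is a minimal in-memory sliding-window limiter keyed by IP address. The name `createRateLimiter` and its options are hypothetical, and a production setup would more likely rely on a WAF, a reverse proxy, or a shared store rather than process memory.

```javascript
// Creates a limiter that allows at most `limit` requests per `windowMs`
// milliseconds for each key (for example, an IP address).
function createRateLimiter({ limit, windowMs }) {
  const hits = new Map(); // key -> timestamps of recently allowed requests

  // `now` is injectable to make the limiter easy to test.
  return function isAllowed(key, now = Date.now()) {
    // Keep only the requests that are still inside the window.
    const recent = (hits.get(key) ?? []).filter((t) => now - t < windowMs);
    const allowed = recent.length < limit;
    if (allowed) {
      recent.push(now);
    }
    hits.set(key, recent);
    return allowed;
  };
}
```

For example, `createRateLimiter({ limit: 10, windowMs: 60000 })` would permit ten searches per minute per IP address, after which your endpoint could respond with a 429 status.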