
A new approach to content control and monetization

For publishers and content creators, sharing work online has always come with trade-offs. If you leave your site open, automated bots and AI crawlers can copy your content without permission. If you lock everything behind a paywall, you risk losing readers who would otherwise become loyal fans. There have been few ways to control who accesses your work, and even fewer ways to earn fair compensation from automated systems.

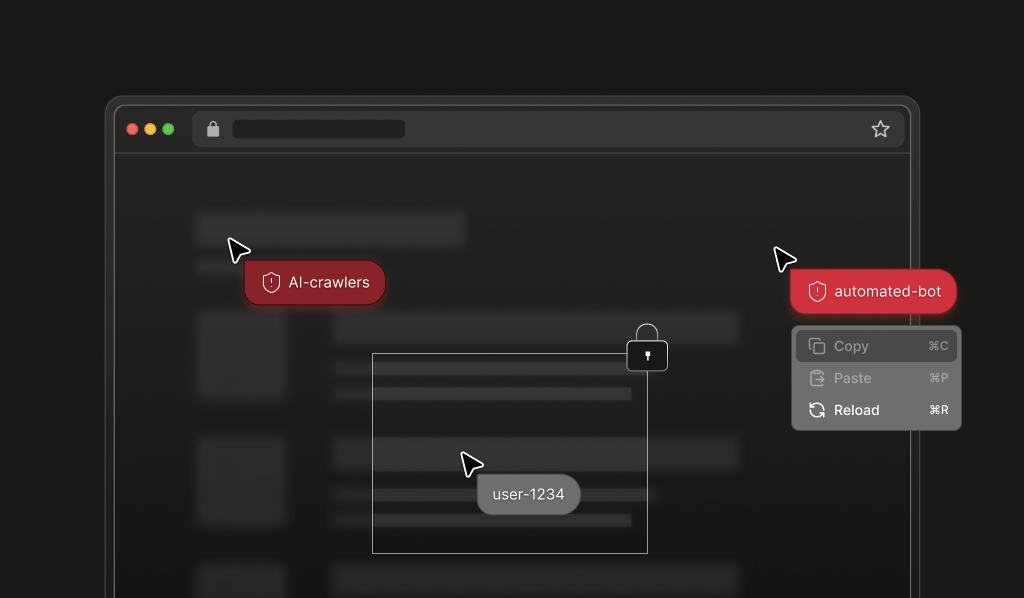

Until now. Cloudflare’s new pay per crawl feature changes this equation. With pay per crawl, publishers can decide which automated crawlers get in, which must pay, and which are blocked entirely. This brings new control and a fairer way to monetize valuable content, without blocking real visitors with restrictive paywalls.

How pay per crawl works

Pay per crawl lets publishers set rules for automated systems. When an AI crawler or other automated tool tries to access your website, you choose what happens next:

- Allow the crawler to access your content for free

- Charge a set price for each request

- Block the crawler entirely
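On the publisher's side, this amounts to a lookup from crawler identity to one of three actions. The Python sketch below is a minimal illustration of that model. The crawler names, the price, and the fallback of blocking unknown crawlers are hypothetical choices. In practice these rules are configured in Cloudflare, not in your own code.

```python
from dataclasses import dataclass
from decimal import Decimal
from enum import Enum

class Action(Enum):
    ALLOW = "allow"    # free access
    CHARGE = "charge"  # pay a set price for each request
    BLOCK = "block"    # no access at all

@dataclass(frozen=True)
class Rule:
    action: Action
    price_usd: Decimal = Decimal("0")  # only meaningful for CHARGE

# Hypothetical crawler names and prices, for illustration only.
POLICY: dict[str, Rule] = {
    "research-crawler.example": Rule(Action.ALLOW),  # e.g. a free-access research partner
    "ai-crawler.example": Rule(Action.CHARGE, Decimal("0.01")),
    "unwanted-bot.example": Rule(Action.BLOCK),
}

# Assumed fallback for crawlers without an explicit rule.
DEFAULT_RULE = Rule(Action.BLOCK)

def rule_for(crawler: str) -> Rule:
    """Return the publisher's rule for a crawler, or the default rule."""
    return POLICY.get(crawler, DEFAULT_RULE)
```

For example, `rule_for("ai-crawler.example")` returns a `CHARGE` rule priced at `Decimal("0.01")`. Using `Decimal` rather than `float` keeps small per-request prices exact.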

Cloudflare manages the technical details. Crawlers that want access must register with Cloudflare and set up cryptographic authentication, which prevents anyone else from impersonating them. When a crawler requests content that carries a price, it can use a request header to state how much it is willing to pay. If payment is required and the crawler hasn’t offered enough, it receives an HTTP 402 Payment Required response that states the price. The crawler can then decide whether to pay.
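The simulation below shows how a charging site might answer a verified crawler, which makes the exchange more concrete. When payment is missing or too low, the site returns HTTP 402 Payment Required and quotes the price. The header names (`crawler-price`, `crawler-exact-price`, `crawler-max-price`, `crawler-charged`) follow Cloudflare's launch announcement of pay per crawl and should be checked against current documentation. The `USD 0.01` price format, the function names, and the handler itself are assumptions made for illustration. None of this is Cloudflare's implementation.

```python
from decimal import Decimal

def format_price(amount: Decimal) -> str:
    return f"USD {amount:.2f}"  # assumed wire format, e.g. "USD 0.01"

def parse_price(value: str) -> Decimal:
    currency, amount = value.split()
    if currency != "USD":
        raise ValueError(f"unsupported currency: {currency}")
    return Decimal(amount)

def handle_paid_request(headers: dict[str, str], price: Decimal) -> tuple[int, dict[str, str]]:
    """Simulate a site charging `price` per request (header names assumed lowercase)."""
    if "crawler-exact-price" in headers:
        # Reactive flow: the crawler repeats the exact price it was quoted.
        accepted = parse_price(headers["crawler-exact-price"]) == price
    elif "crawler-max-price" in headers:
        # Proactive flow: the crawler states a ceiling up front.
        accepted = parse_price(headers["crawler-max-price"]) >= price
    else:
        accepted = False
    if accepted:
        # Serve the content and report what was charged.
        return 200, {"crawler-charged": format_price(price)}
    # No sufficient payment intent: 402 Payment Required with the price quoted.
    return 402, {"crawler-price": format_price(price)}
```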

This system uses secure digital signatures, so only approved crawlers can reach protected material. Publishers don’t have to handle billing, security, or settlement themselves; Cloudflare takes care of it all behind the scenes.
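Signed requests work like this: the crawler signs parts of each request with a key only it holds, and the receiving side checks that signature against the crawler's registered key. Cloudflare's crawler authentication is built on public-key HTTP message signatures (RFC 9421). This sketch instead uses HMAC-SHA256, a shared-secret scheme, so it can run with the Python standard library alone. It is a stand-in, not the real mechanism. The registry, key, and simplified signature base are hypothetical.

```python
import hashlib
import hmac

# Hypothetical registry of approved crawlers. A real deployment stores public
# keys; a shared secret is used here only because HMAC is in the stdlib.
REGISTERED_KEYS: dict[str, bytes] = {"ai-crawler.example": b"demo-secret-key"}

def signature_base(method: str, authority: str, path: str) -> bytes:
    # Greatly simplified stand-in for an RFC 9421 signature base:
    # the request components the signature covers.
    return f"{method}\n{authority}\n{path}".encode()

def sign(key: bytes, method: str, authority: str, path: str) -> str:
    """Crawler side: sign the covered request components."""
    return hmac.new(key, signature_base(method, authority, path), hashlib.sha256).hexdigest()

def verify(key_id: str, signature: str, method: str, authority: str, path: str) -> bool:
    """Site side: accept only registered crawlers whose signature matches."""
    key = REGISTERED_KEYS.get(key_id)
    if key is None:
        return False  # unknown crawler: treat as unauthenticated
    expected = sign(key, method, authority, path)
    return hmac.compare_digest(expected, signature)  # constant-time comparison
```

A signature made for `/article` fails verification if it is replayed against a different path. A real public-key scheme has one more property: the verifier only holds the crawler's public key, so a leaked verifier cannot be used to forge requests. That property is the main reason the real system doesn't use a shared secret.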

Why pay per crawl is a step forward for publishers

Pay per crawl gives publishers real power over their content. Instead of chasing AI companies or negotiating one-off deals, you can set clear, scalable rules for who can access your work and how much they pay. Because the system builds on existing web standards, such as the HTTP 402 Payment Required status code, you can adopt it without building new infrastructure.

You decide which crawlers are allowed, which pay, and which are blocked. This flexibility lets you create special partnerships, offer free access to certain researchers, or monetize your content broadly. For creators, it means finally earning revenue from the work you invest so much time and energy into, without losing control over who uses it and how.

Pay per crawl also supports both proactive and reactive payment. A crawler can state upfront the maximum price it is willing to pay, or it can learn the price only after its first request is refused. This makes the system adaptable to many different use cases and business models.
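The two flows differ only in what the crawler sends first. The minimal sketch below shows both from the crawler's side. It assumes the header names from Cloudflare's announcement and a hypothetical `fetch` callable that sends request headers and returns the status code and response headers.

```python
from decimal import Decimal
from typing import Callable, Optional

# Hypothetical transport: sends request headers, returns (status, response headers).
Fetch = Callable[[dict[str, str]], tuple[int, dict[str, str]]]

def usd(amount: Decimal) -> str:
    return f"USD {amount}"  # assumed wire format, e.g. "USD 0.01"

def amount(value: str) -> Decimal:
    return Decimal(value.split()[1])  # "USD 0.01" -> Decimal("0.01")

def crawl_proactive(fetch: Fetch, budget: Decimal) -> Optional[Decimal]:
    """Intent-first: state the most we will pay in the first request."""
    status, headers = fetch({"crawler-max-price": usd(budget)})
    if status == 200:
        return amount(headers.get("crawler-charged", "USD 0"))
    return None  # 402 (price above budget) or blocked

def crawl_reactive(fetch: Fetch, budget: Decimal) -> Optional[Decimal]:
    """Discovery-first: request without paying, read the quoted price, then decide."""
    status, headers = fetch({})
    if status == 200:
        return Decimal(0)  # content was free for this crawler
    if status != 402:
        return None  # blocked or some other failure
    price = amount(headers["crawler-price"])
    if price > budget:
        return None  # too expensive: walk away without paying
    status, headers = fetch({"crawler-exact-price": usd(price)})
    return amount(headers.get("crawler-charged", "USD 0")) if status == 200 else None
```

Each function returns the amount charged, or `None` if the crawler did not get the content. The proactive flow saves a round trip when the crawler already knows its budget. The reactive flow lets the crawler see the exact price before committing.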

The limitations: What pay per crawl cannot prevent

While pay per crawl is a strong step forward, it’s not a complete solution on its own. The system relies on automated crawlers playing by the rules: registering, authenticating, and paying when required. But not every bot is willing to do that.

Sophisticated fraudsters and malicious users have developed advanced ways to get around content protections. Here are a few common techniques:

- Residential proxy networks: Bots can route their requests through home internet connections, making their traffic look like it comes from real visitors. This makes it hard to spot automated scraping using IP addresses alone.

- Browser automation tools: Tools like Selenium or Puppeteer have become good at mimicking human browsing. They execute JavaScript, handle cookies, and even simulate mouse movements, so their sessions can look almost identical to those of real people.

- Distributed scraping: Some operations spread requests across thousands of IP addresses and user agents. Each individual request looks harmless, but together they can extract large amounts of content.

Because of these tactics, publishers need more than just access controls. They need deeper insight into who is visiting their site and how those visitors behave.

How Fingerprint fills the gaps

This is where Fingerprint’s device intelligence platform comes in. Fingerprint helps publishers spot and stop advanced bots and AI crawlers, even those using sophisticated evasion techniques.

Fingerprint uses over 100 signals from each browser and device to generate a unique visitor ID. This helps you tell the difference between legitimate users and suspicious visitors, even when someone tries to hide their identity by clearing cookies or switching browsers.
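An ID derived from device signals, rather than from stored state such as cookies, is what lets identification survive cookie clearing. The toy function below illustrates only that general idea. It hashes a canonical serialization of a few hypothetical signals. It is not Fingerprint's algorithm, whose identification uses far more signals and considerably more involved techniques that aren't shown here.

```python
import hashlib
import json

def toy_visitor_id(signals: dict[str, object]) -> str:
    """Toy illustration only, NOT Fingerprint's algorithm.

    Serializes the signals canonically (sorted keys) and hashes them, so the
    same device signals always yield the same ID. Cookies are not an input,
    so clearing them does not change the result.
    """
    canonical = json.dumps(signals, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:20]
```

A plain hash like this changes whenever any single input changes. That fragility is one reason production systems don't work this simply.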

But the real power comes from Fingerprint’s 20+ Smart Signals. These signals give you detailed information about each visitor’s environment and behavior, making it easier to spot automated tools and fraud attempts.

For publishers that want to protect their content, several Smart Signals are especially helpful:

- Bot Detection: This Smart Signal identifies visitors using automated tools. It works in the background, so genuine users never see a "Verify you are a human" prompt or a frustrating CAPTCHA challenge.

- Residential Proxy Detection: Many scrapers use residential proxies to hide their true origins. Fingerprint can detect when a request is coming through these networks, flagging suspicious activity that might otherwise look normal.

- VPN Detection: Fingerprint alerts you if a visitor is using a virtual private network (VPN) to hide their location or identity, helping you spot potential attempts to bypass payment or access controls.

- Browser Tampering Detection: Some bots try to avoid detection by altering browser settings or using anti-detect browsers. Fingerprint can spot these changes, revealing attempts to sneak past your defenses.

- High-Activity Device Detection: When a single device makes an unusually high number of requests, as in automated scraping, Fingerprint highlights this behavior, even if each request appears harmless on its own.
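High-Activity Device Detection is a managed Smart Signal. The sketch below illustrates the underlying idea with a generic sliding-window counter, and also shows why distributed scraping defeats per-IP limits. The only change needed is to key the counter on a visitor ID instead of an IP address. The limit, window, and IDs are hypothetical, and this is not Fingerprint's implementation.

```python
from collections import defaultdict, deque

class HighActivityDetector:
    """Flag a key that makes more than `limit` requests within `window` seconds."""

    def __init__(self, limit: int, window: float) -> None:
        self.limit = limit
        self.window = window
        self.events: defaultdict[str, deque] = defaultdict(deque)

    def record(self, key: str, now: float) -> bool:
        """Record one request at time `now`; return True if the key is over the limit."""
        timestamps = self.events[key]
        timestamps.append(now)
        # Drop requests that have fallen out of the sliding window.
        while timestamps and timestamps[0] <= now - self.window:
            timestamps.popleft()
        return len(timestamps) > self.limit

def distributed_scrape_demo() -> tuple[bool, bool]:
    """One device sends 50 requests over 50 seconds, each from a different IP."""
    by_ip = HighActivityDetector(limit=20, window=60.0)
    by_visitor = HighActivityDetector(limit=20, window=60.0)
    ip_flagged = visitor_flagged = False
    for i in range(50):
        ip_flagged |= by_ip.record(f"203.0.113.{i}", now=float(i))
        visitor_flagged |= by_visitor.record("visitor-abc123", now=float(i))
    return ip_flagged, visitor_flagged  # (False, True)
```

Each IP makes only one request, so the per-IP counter never fires. The per-visitor counter trips on the 21st request.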

Fingerprint provides these real-time insights so that you can take action immediately. Whether you want to block suspicious visitors, require additional verification, or simply monitor for patterns, you have the information you need to stay in control, even when malicious crawlers don’t want to play nice.
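Each publisher decides how these signals translate into an action. The Python sketch below shows one hypothetical mapping. The `Signals` fields are simplified stand-ins, not the shape of Fingerprint's API responses, and the choice of when to block versus challenge is illustrative.

```python
from dataclasses import dataclass
from enum import Enum

class Decision(Enum):
    ALLOW = "allow"
    MONITOR = "monitor"
    CHALLENGE = "challenge"
    BLOCK = "block"

@dataclass(frozen=True)
class Signals:
    # Hypothetical, simplified flags; not Fingerprint's Server API schema.
    bot: bool = False
    residential_proxy: bool = False
    vpn: bool = False
    tampering: bool = False
    high_activity: bool = False

def decide(s: Signals) -> Decision:
    if s.bot or s.tampering:
        return Decision.BLOCK  # strong evidence of automation or evasion
    if s.residential_proxy and s.high_activity:
        return Decision.BLOCK  # two weaker signals that together look like scraping
    if s.residential_proxy or s.high_activity:
        return Decision.CHALLENGE  # ask for extra verification
    if s.vpn:
        return Decision.MONITOR  # many legitimate readers use VPNs
    return Decision.ALLOW
```

The mapping treats a VPN alone as a reason to watch rather than block, because blocking every VPN user would also turn away real readers.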

Building a layered defense: Monetization plus protection

The introduction of pay per crawl gives publishers a practical way to earn revenue from automated access. It’s a welcome change for creators who want to be compensated fairly for their work. But as with any security measure, determined bad actors will keep looking for ways around it.

That’s why a layered approach works best. By combining pay per crawl with Fingerprint’s device intelligence, you create a strong, adaptable defense. Legitimate crawlers can pay for access and support your content, while advanced bots and fraudsters are identified and blocked before they can copy or steal material.
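The layering can be sketched as a single routing function. Requests that arrive with a verified crawler identity go through pay per crawl rules, and everything else is judged on device intelligence. The `Request` fields, crawler names, and return values are hypothetical simplifications.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class Request:
    verified_crawler: Optional[str]  # set only if the crawler's signature checked out
    suspicious: bool                 # summary verdict from device-intelligence signals
    paid: bool                       # whether a charge was accepted for this request

# Hypothetical per-crawler rules: "allow", "charge", or "block".
CRAWLER_RULES = {"research-crawler.example": "allow", "ai-crawler.example": "charge"}

def route(req: Request) -> str:
    if req.verified_crawler is not None:
        # Layer 1: authenticated crawlers are handled by pay per crawl rules.
        rule = CRAWLER_RULES.get(req.verified_crawler, "block")
        if rule == "allow" or (rule == "charge" and req.paid):
            return "serve"
        return "402" if rule == "charge" else "block"
    # Layer 2: all other traffic is judged on device intelligence.
    return "block" if req.suspicious else "serve"
```

This sketch makes one notable design choice: it trusts a verified crawler's identity and skips the device checks for it. A stricter setup could apply both layers to every request.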

If you want to see how device intelligence can help you protect your content and revenue, you can try Fingerprint with a free trial or connect with our team to discuss how device intelligence can work alongside pay per crawl to keep your site secure and profitable.