
The terms “AI agent” and “agentic AI” are everywhere these days as AI companies roll out large language model (LLM)-based digital assistants that can execute complex tasks. What do they mean for companies trying to prevent fraud on their websites?

The truth is that right now, it’s possible but tough to detect AI agents. Because they are designed to act on behalf of a human user, they often mimic human browsing behaviors. Some cloud-based AI agents like OpenAI’s Operator run on virtual machines in data centers with known IP addresses, but others like Anthropic’s Computer Use API act through a human user’s browser. Companies can block visitors from those known IP addresses, but they risk blocking legitimate customers at the same time.
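The trade-off with known IP addresses can be shown with a minimal Python sketch that checks a visitor's IP against a list of data-center ranges. The ranges below are placeholders taken from the reserved documentation blocks (RFC 5737). They are not real Operator or cloud-provider addresses, and the function names are hypothetical. The sketch flags a match for review instead of blocking it outright, for two reasons: a match does not prove the visitor is unwanted, and agents that act through a user's own browser will never match.

```python
import ipaddress

# Hypothetical stand-ins for a published list of agent / data-center egress
# ranges. These are RFC 5737 documentation ranges, not real provider IPs.
KNOWN_AGENT_RANGES = [
    ipaddress.ip_network("203.0.113.0/24"),
    ipaddress.ip_network("198.51.100.0/24"),
]


def classify_ip(client_ip: str) -> str:
    """Return 'known_agent_range' if the IP falls in a listed range, else 'unknown'.

    A match only says where the request came from; a miss does not prove the
    visitor is human (agents driving a user's own browser never match).
    """
    addr = ipaddress.ip_address(client_ip)
    # Containment checks across IPv4/IPv6 versions simply return False.
    if any(addr in net for net in KNOWN_AGENT_RANGES):
        return "known_agent_range"
    return "unknown"


def decide(client_ip: str) -> str:
    # Flag rather than hard-block: many of these requests may be agents
    # acting for legitimate customers.
    if classify_ip(client_ip) == "known_agent_range":
        return "flag_for_review"
    return "allow"


print(decide("203.0.113.42"))  # flag_for_review
print(decide("192.0.2.10"))    # allow
```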

AI agents are not (for now) an efficient way to commit fraud, due to their high costs, their limited functionality, and the amount of human intervention they require for steps like making credit card purchases. They also pose security problems that may hinder wider adoption by consumers until risk management tools evolve to better protect agents from hijacking.

Right now, companies face a bigger, long-standing problem: increasingly advanced bots that use sophisticated algorithms to impersonate humans, scrape content, or automate attacks. Confusingly, some people also call these bots “AI agents,” so understanding the risks starts with distinguishing bots that follow pre-programmed rules from agents that can plan and reason.

What are AI agents?

The term “AI agent” is used to describe many different kinds of digital automation. Since it was coined in the mid-1990s, it has been applied to customer service chatbots, voice assistants like Siri or Alexa, and recommendation systems for shopping or streaming services. It is also sometimes used to describe AI-powered bots that crawl the web or scrape content. In short, it can refer to any AI system that automates a task.

In this post, the term “AI agent” refers to a specific subset of LLM-based agents that use reasoning, planning, and memory to call functions (tools) and interact with external environments, such as a web browser or database. Humans can use natural language to ask these agents to complete multi-step tasks that require autonomous decision-making, such as booking a trip or making an online grocery purchase on the human’s behalf.
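The loop behind such an agent repeats four steps: reason about the goal, call a function, remember the result, and repeat. It can be sketched in a few lines of Python. This is a toy under clear assumptions. `plan_next` is a hard-coded rule standing in for the LLM's reasoning step, and `search_products` and `add_to_cart` are hypothetical stub tools, not the API of Operator, Computer Use, or any other product.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Any, Callable


# Hypothetical tools. A real agent would drive a browser or query a database;
# these stubs return canned data so the loop can run on its own.
def search_products(query: str) -> list[str]:
    catalog = {"milk": ["milk-1l", "milk-2l"], "eggs": ["eggs-12"]}
    return catalog.get(query, [])


def add_to_cart(sku: str) -> str:
    return f"added {sku}"


TOOLS: dict[str, Callable[..., Any]] = {
    "search_products": search_products,
    "add_to_cart": add_to_cart,
}


@dataclass
class ToyAgent:
    # Memory: every (tool name, arguments, result) observed so far.
    memory: list[tuple[str, dict, Any]] = field(default_factory=list)

    def plan_next(self, goal: list[str]) -> tuple[str, dict] | None:
        """Stand-in for the LLM's reasoning step: choose the next tool call
        from the goal and what is already in memory."""
        for item in goal:
            searches = [r for t, a, r in self.memory
                        if t == "search_products" and a["query"] == item]
            if not searches:
                return "search_products", {"query": item}
            skus = searches[0]
            added = any(t == "add_to_cart" and a["sku"] in skus
                        for t, a, _ in self.memory)
            if skus and not added:
                return "add_to_cart", {"sku": skus[0]}
        return None  # every item is handled or has no matching product

    def run(self, goal: list[str], max_steps: int = 10) -> list[tuple[str, dict, Any]]:
        for _ in range(max_steps):
            step = self.plan_next(goal)
            if step is None:
                break
            name, args = step
            result = TOOLS[name](**args)  # function calling: dispatch by tool name
            self.memory.append((name, args, result))  # remember the observation
        return self.memory


agent = ToyAgent()
for tool, args, result in agent.run(["milk", "bread"]):
    print(tool, args, result)
```

In a real agent, the model chooses the next call in natural-language reasoning. The surrounding loop of planning, dispatching, and storing the observation in memory is what lets it work through multi-step tasks.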

Some agents, like OpenAI’s Operator and Anthropic’s Computer Use API, are multimodal systems that can both read text and see images, allowing them to navigate web pages using computer vision. The goal of this kind of consumer-facing AI agent is to help people spend less time doing online errands.

AI agents are not yet efficient

However, AI agents are unlikely to become a major source of web traffic in the near future: their drawbacks give both legitimate users and fraudsters plenty of reasons to hold off. A few of the challenges agents face include:

- Security risks: Agents are highly vulnerable to hijacking via prompt injection attacks and jailbreaking, exposing end users to fraud. OpenAI’s engineering lead for Operator said the agent poses “an incredible amount of safety challenges” that include fraud and unauthorized purchases by the agent.

- Ineffectiveness: The technology for LLM-based reasoning agents is still very new and not yet suited for more complex tasks. OpenAI noted when it released its Operator agent: “It performs best on short, repeatable tasks but faces challenges with more complex tasks and environments like slideshows and calendars.”

- Hallucinations: Because they are based on LLMs, AI agents will sometimes make up answers in response to queries. For example, if you ask an agent to find three hotels for your trip and it invents one of them, the introduction of bad information may cascade into the agent attempting to carry out bookings for places that don’t exist or booking an incorrect hotel.

- Slow processing times: Agents are built on top of models that are expensive to run and require large amounts of compute, so they sometimes take longer than a human would to carry out individual steps within a larger task.

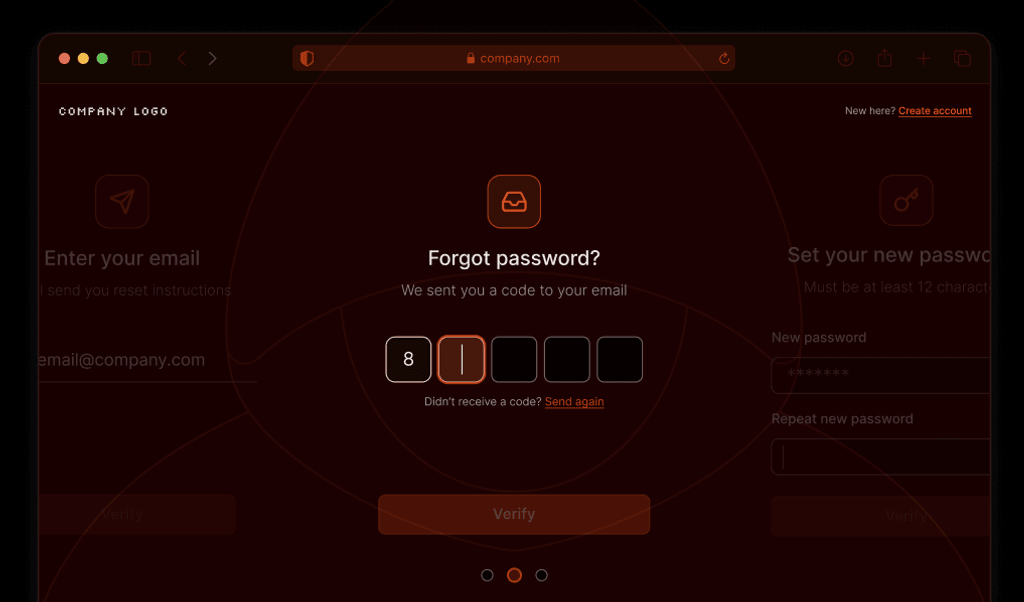

- Human intervention: When an agent comes to an interface that requires a login, a human must manually enter credentials such as a password. This means agents require frequent input to get a task done (although Operator has been known to carry out transactions without approval, which could lead to chargebacks).
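One common way to handle the hallucination and approval problems is to put checks between what an agent proposes and what actually executes. The Python sketch below makes two assumptions. `VERIFIED_HOTELS` is a hypothetical trusted list standing in for results returned by the booking site itself, and `human_approved` records whether a person confirmed the purchase. It illustrates the idea only and does not describe how Operator or any other product works.

```python
from dataclasses import dataclass

# Hypothetical source of truth: hotels confirmed by the booking site's own
# search results, not taken from the model's text output.
VERIFIED_HOTELS = {"Harbor Inn", "Central Suites"}


@dataclass
class ProposedBooking:
    hotel: str
    amount_usd: float


def review_booking(booking: ProposedBooking, human_approved: bool) -> str:
    """Decide what to do with a booking an agent proposes.

    A hallucinated hotel is rejected before later steps build on it, and no
    charge is made without explicit human approval.
    """
    if booking.hotel not in VERIFIED_HOTELS:
        return "reject: hotel not found in verified results"
    if not human_approved:
        return "pause: waiting for human approval"
    return f"book: {booking.hotel} for ${booking.amount_usd:.2f}"


print(review_booking(ProposedBooking("Seaside Palace", 180.0), human_approved=True))
print(review_booking(ProposedBooking("Harbor Inn", 150.0), human_approved=False))
print(review_booking(ProposedBooking("Harbor Inn", 150.0), human_approved=True))
```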

It’s worth considering whether the risks from AI agents merit trying to block them. Because many agents will be acting on behalf of legitimate users, and they don’t yet make up a large share of overall traffic, it may make more sense not to block them (for now) so you don’t frustrate real customers.

Bot threats outweigh agentic AI risks

The much greater fraud danger comes from bots, which now make up about half of all internet traffic. Whereas AI agents can perform many kinds of tasks across multiple environments, bots are automated applications typically purpose-built to accomplish a narrow goal. There are good bots, like search engine crawlers that index content or bots that collect data for research, and bad bots, which can be used to steal customer data and make unauthorized purchases.

Generative AI tools that speed up coding are making it easier than ever to build bots for credential stuffing attacks, denial-of-service attacks, and card-cracking attempts. (The bad bots themselves, however, do not typically use AI.) Fraudsters rely on bots they can create cheaply and scale quickly to conduct thousands of automated attacks in a short time. Any application that uses AI takes more time and money to build, and runs more slowly, than a simple rules-based bot.
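The speed and scale that make bots attractive to fraudsters also leave a measurable trace. As one illustrative signal, not a complete defense, the Python sketch below counts login attempts per source within a sliding time window. The class name and thresholds are assumptions chosen for the example. Bots that rotate through many IP addresses spread their attempts across sources and would slip past a per-IP counter, so a check like this would be combined with other signals.

```python
from __future__ import annotations

from collections import defaultdict, deque


class LoginVelocityMonitor:
    """Flag a source that makes more than `max_attempts` login attempts
    within `window_seconds`. The default values are illustrative assumptions."""

    def __init__(self, max_attempts: int = 10, window_seconds: float = 60.0):
        self.max_attempts = max_attempts
        self.window_seconds = window_seconds
        # Per-source timestamps of recent attempts, oldest first.
        self._attempts: dict[str, deque[float]] = defaultdict(deque)

    def record(self, source: str, timestamp: float) -> bool:
        """Record one attempt; return True if the source now exceeds the limit."""
        window = self._attempts[source]
        window.append(timestamp)
        # Drop attempts that have aged out of the sliding window.
        while window and timestamp - window[0] > self.window_seconds:
            window.popleft()
        return len(window) > self.max_attempts


monitor = LoginVelocityMonitor(max_attempts=10, window_seconds=60)
# A few attempts spread over 90 seconds, at a human pace.
human = [monitor.record("198.51.100.7", t) for t in (0, 20, 45, 90)]
# Fifty attempts in five seconds, at a bot pace.
bot = [monitor.record("203.0.113.9", i * 0.1) for i in range(50)]
print(any(human), any(bot))  # False True
```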

The damage caused by bots is enormous. A 2024 estimate from Thales put annual global losses from bot attacks at $86-116 billion. The potential damage from AI agents, by contrast, is still largely theoretical because they have yet to be widely adopted.

How bot detection can help protect your business

Bad bots can overwhelm your servers, skew your analytics, scrape proprietary data, and enact multiple types of fraud if not detected and appropriately managed. Businesses that want to mitigate these issues need tools to distinguish human visitors from malicious bot traffic.

Fingerprint is a device intelligence platform that provides browser and device identification with industry-leading accuracy. Our Bot Detection Smart Signal collects large amounts of browser data that bots leak (errors, network overrides, browser attribute inconsistencies, API changes, and more) to allow you to tell human users apart from automated visitors. With that information, you can quickly take appropriate action, such as blocking a bot’s IP, withholding content, or asking for human verification.
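The general idea of turning leaked browser data into an action can be sketched as a small rule set in Python. This is not Fingerprint's API or its detection logic. The `BrowserSignals` fields, the indicator rules, and the thresholds are hypothetical. They only show how an attribute inconsistency (a Windows user agent paired with a Linux platform) or an automation flag (`navigator.webdriver` being true) might map to allowing, challenging, or blocking a visitor.

```python
from dataclasses import dataclass


@dataclass
class BrowserSignals:
    # Hypothetical fields a client-side script might collect; the names are
    # illustrative and are not Fingerprint's API.
    user_agent: str
    platform: str        # e.g. the value of navigator.platform
    webdriver: bool      # navigator.webdriver is true under WebDriver automation
    js_errors: int = 0   # unexpected errors from probed browser APIs


def bot_indicators(s: BrowserSignals) -> list[str]:
    """Collect simple, illustrative signs of automation."""
    found = []
    if s.webdriver:
        found.append("webdriver flag set")
    if "HeadlessChrome" in s.user_agent:
        found.append("headless user agent")
    # Attribute inconsistency: the UA claims Windows but the platform does not.
    if "Windows" in s.user_agent and not s.platform.startswith("Win"):
        found.append("user agent / platform mismatch")
    if s.js_errors > 0:
        found.append("unexpected API errors")
    return found


def choose_action(s: BrowserSignals) -> str:
    """Map the number of indicators to a response (thresholds are assumptions)."""
    n = len(bot_indicators(s))
    if n >= 2:
        return "block"
    if n == 1:
        return "challenge"  # e.g. ask for human verification
    return "allow"


visitor = BrowserSignals(
    user_agent=("Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 "
                "(KHTML, like Gecko) HeadlessChrome/120.0.0.0 Safari/537.36"),
    platform="Linux x86_64",
    webdriver=True,
)
print(bot_indicators(visitor))  # ['webdriver flag set', 'headless user agent']
print(choose_action(visitor))   # block
```

Each rule taken alone is easy for a determined bot author to spoof. That is why production systems look at many signals together rather than relying on any single check.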

The future of AI agent detection

AI agents are still in very early stages of development, and they pose safety challenges that will make users hesitant to adopt them until some of those risks can be mitigated. As a result, they are not yet worth the hassle and expense for fraudsters as a major tool or target.

As AI agents improve and their adoption grows, the internet will adapt to their presence, with browsers and websites optimized for agents. Businesses will also need to be able to tell agents apart from humans, because the two will interact with the web in very different ways. That means agents may drive the next evolution of device intelligence.